Future of Cybersecurity: How AI Is Reshaping Threats and Defenses in 2026

As 2026 dawns, AI is fundamentally altering the cybersecurity landscape. Generative AI has “democratized high-level hacking”, forcing security out of the IT back room and into board-level strategy. Global infosec spending has surged past $240 billion in 2026, up 12.5% from 2025, reflecting this urgency. Government tech leaders must prepare for AI in cybersecurity as both a threat accelerator and a shield. In practice, AI enables defenders to hunt threats automatically, but it also gives adversaries agentic capabilities: autonomous AI agents that can pivot through networks at machine speed. In short, AI “is the new arms race” in cyber: attackers will use it to craft realistic phishing, deepfakes, and even self-modifying malware, while defenders race to deploy AI-powered anomaly detection and real-time response. Analysts project that by 2027 about 17% of all cyberattacks will leverage AI in some way, from AI-cloned voices to automated code injection.

Governments remain prime targets. High-profile cyber attacks on US government agencies have already become a serious concern. For example, a 2020 incident overloaded U.S. Health and Human Services servers, a stark reminder that cyber attacks on US government agencies can disrupt critical services. Criminals and hostile states pursue sensitive data and infrastructure control, so modern threats often combine AI-driven sophistication with geopolitical motives. This means government IT teams must deploy specialized cybersecurity solutions for government that use AI to match AI: for instance, machine-learning systems trained to secure the very unstructured data that fuels agency AI models.

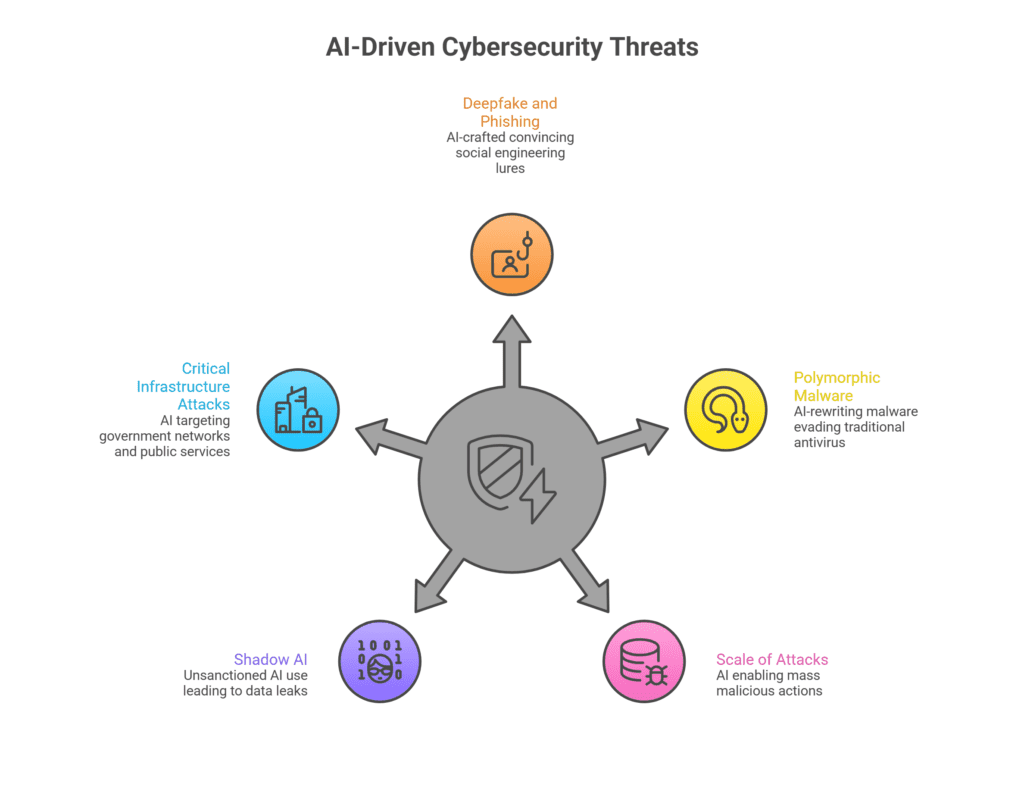

Evolving Threats in an AI-Driven Landscape

- Deepfake and AI-Enhanced Phishing: Attackers now use AI to craft highly convincing social engineering lures. Generative models can clone voices and faces or write flawless emails, making spear-phishing and CEO fraud far harder to detect. In 2025, one report found a surge in AI-related cybercrime in Florida using these tactics.

- Polymorphic and Autonomous Malware: New malware strains use AI to rewrite their own code continuously. Such polymorphic ransomware evades traditional antivirus because it looks different each time. Simultaneously, autonomous bots (sometimes called “agentic” threats) scan networks and exploit vulnerabilities at machine speed. Automated supply-chain attacks, in which AI-driven probes compromise third-party vendors to reach bigger targets, are expected to accelerate.

- Scale of Attacks: AI allows attackers to launch thousands or millions of malicious actions at once. For example, credential harvesting at scale becomes possible when AI systems auto-generate personalized phishing en masse, using publicly available data to spoof users’ contacts. These mass campaigns can overwhelm basic defenses. One projection warns that the volume and cost of AI-enhanced attacks are reaching record highs.

- Shadow AI and Data Poisoning: In the public sector specifically, unsanctioned AI use (“shadow AI”) by employees can leak data inadvertently. There’s also the risk of attackers poisoning training data or exploiting AI models directly. In essence, every powerful AI tool introduces a new attack surface.

- Attacks on Critical Infrastructure and Government: Nation-states and cybercriminals persistently target government networks. Hundreds of millions of Americans rely on public services that are increasingly online, and any breach can steal citizen data or disrupt operations. Recent studies emphasize that cyber attacks on US government networks are ongoing, and agencies must brace for more.

Together, these AI-empowered threats make legacy security insufficient. Public-sector leaders report unprecedented concerns: volatile global conflict, tightening regulations, and AI advances all converge. As one expert put it, “the cybersecurity landscape for the coming years will be marked by increasing volatility, complexity and uncertainty” due to AI and other innovations.

AI-Enhanced Defenses for Government Networks

- AI-Driven Anomaly Detection: Modern security tools use machine learning to establish a baseline of normal behavior for every user and device. They then flag deviations (such as unusual login locations or data access patterns) that might indicate a breach. This UEBA (User and Entity Behavior Analytics) approach can catch insider threats or credential compromises quickly. For government use, these AI-enabled monitors can sift through the vast logs of public-sector systems far faster than any analyst.

- Predictive Threat Intelligence: AI systems continuously crawl open-source and dark-web data to spot emerging risks. By analyzing cybercrime forums and code repositories, they can predict which vulnerabilities attackers will target next, allowing agencies to patch them preemptively. In effect, AI cyber-defenses “hunt” threats before they strike, turning threat intelligence into a proactive shield.

- Automated Incident Response (SOAR): When a threat is detected, AI doesn’t just alert – it can act. Security Orchestration, Automation, and Response (SOAR) platforms increasingly include AI: they can instantly isolate infected machines, revoke or rotate credentials, and reroute network traffic to contain breaches. This drastically cuts down mean time to respond. Whereas human-led response might take hours, an AI-accelerated system can enforce countermeasures within seconds.

- Zero Trust and Continuous Authentication: AI strengthens Zero Trust architectures. By continuously authenticating users through multi-factor authentication and biometrics and by analyzing real-time behavior, AI helps enforce “least privilege” at all times. For example, if an official’s account suddenly tries to transfer funds at odd hours, the system can auto-lock the account or require re-authentication. Such dynamic access control is crucial as more digital government services go live.

- Enhanced Threat Intelligence and Analytics: Governments generate massive data from sensors, cameras, and transaction systems. AI-driven analytics can process these at scale to find attack patterns that humans miss. Machine learning models continuously update with new threat data, effectively giving agencies a force multiplier in their Security Operations Centers (SOCs).
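The baseline-and-deviation idea behind UEBA, and the tiered automated response described for SOAR, can be illustrated with a minimal sketch. This is not how any particular product works: it assumes a single numeric signal per account (daily megabytes downloaded), uses a simple z-score in place of a trained machine-learning model, and the function names, thresholds, and response labels are all hypothetical.

```python
from statistics import mean, stdev


def build_baseline(history):
    """Summarize an account's past behavior as (mean, sample standard deviation)."""
    return mean(history), stdev(history)


def anomaly_score(value, baseline):
    """Z-score: how many standard deviations the new value sits from the baseline mean."""
    mu, sigma = baseline
    if sigma == 0:
        # A perfectly constant history: any change at all is maximally unusual.
        return 0.0 if value == mu else float("inf")
    return abs(value - mu) / sigma


def choose_response(score, alert_threshold=3.0, contain_threshold=6.0):
    """Map a score to a hypothetical response tier (thresholds are illustrative)."""
    if score >= contain_threshold:
        return "isolate_and_reauthenticate"
    if score >= alert_threshold:
        return "alert_analyst"
    return "allow"


# Hypothetical data: megabytes downloaded per day by one account.
history = [100, 110, 90, 105, 95]
baseline = build_baseline(history)

for todays_volume in (104, 130, 500):
    score = anomaly_score(todays_volume, baseline)
    print(todays_volume, round(score, 2), choose_response(score))
```

With this sample history, a day of 104 MB is allowed, 130 MB raises an analyst alert, and 500 MB triggers containment. A real deployment would track many signals per user and device and would keep a human in the loop for the containment tier.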

These AI-powered cybersecurity measures help governments meet new ROI and resilience standards. Industry analysis shows that modern SOC teams aim for a time to detect of under one hour and false-positive rates below 5%. Achieving this requires AI automation. In 2026, executives expect to demonstrate security not with slideware but with metrics: a 20–30% risk reduction when AI tools are paired with aggressive upskilling. Notably, 63% of firms now train their staff on AI-cyber tools, effectively upgrading the “human firewall” alongside software. In practice, AI acts as a copilot for human defenders. It filters millions of alerts to highlight real threats, reducing alert fatigue so analysts can focus on strategy.
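As a rough illustration of how the two SOC targets mentioned above could be tracked, the sketch below computes a mean time to detect and a false-positive share from sample records. The data, function names, and record shapes are hypothetical, and "false-positive rate" is assumed here to mean the share of triaged alerts that analysts marked as benign; teams that define it differently would adjust the calculation.

```python
from datetime import datetime, timedelta


def mean_time_to_detect(incidents):
    """Average gap between when each incident started and when it was detected."""
    gaps = [detected - started for started, detected in incidents]
    return sum(gaps, timedelta()) / len(gaps)


def false_positive_share(alerts):
    """Fraction of triaged alerts that turned out not to be real threats."""
    false_positives = sum(1 for is_real_threat in alerts if not is_real_threat)
    return false_positives / len(alerts)


# Hypothetical incidents as (started, detected) timestamp pairs.
incidents = [
    (datetime(2026, 1, 5, 9, 0), datetime(2026, 1, 5, 9, 30)),
    (datetime(2026, 1, 6, 14, 0), datetime(2026, 1, 6, 15, 10)),
]
# Hypothetical triage results: True means the alert was a real threat.
alerts = [True] * 97 + [False] * 3

mttd = mean_time_to_detect(incidents)
fp_share = false_positive_share(alerts)

print("Mean time to detect:", mttd)
print("False-positive share:", fp_share)
print("Meets targets:", mttd < timedelta(hours=1) and fp_share < 0.05)
```

For this sample data the mean time to detect is 50 minutes and the false-positive share is 3%, so both targets are met.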

AI does not replace human experts; rather, it empowers them. Studies emphasize the human role in AI: skilled analysts remain essential for final decisions, ethical judgment, and oversight. In real terms, that means training personnel on AI platforms (so agencies can explore our services for training and integration). AI functions as an assistant that works 24/7, but humans still set priorities and verify AI findings. This blended human-AI approach ensures that the technology serves the public interest and stays transparent, addressing concerns about bias and privacy.

Public Sector Challenges and Emerging Trends

Government technology leaders face unique hurdles in deploying these AI defenses. First, resource constraints loom large. Many state and local IT budgets have been flat or cut, even as threats surge. The Center for Internet Security’s recent funding cuts, for example, have strained state/local monitoring services, leaving agencies more exposed. Staffing shortages compound the problem: a 2025 federal report noted that many agencies remain critically understaffed, unable to implement or even evaluate new AI tools. These issues form part of the AI cybersecurity public sector challenges (limited budgets, legacy systems, and evolving regulations) that experts warn about.

Second, compliance and ethics demand attention. Government data is highly sensitive, and AI relies on large datasets to learn. Poor AI implementation can inadvertently expose citizen PII or violate privacy laws. Agencies must ensure new tools comply with applicable standards and guidance (e.g., from NIST or CISA, or international rules such as GDPR and the EU AI Act). To address this, many governments are developing AI governance frameworks. For example, public agencies often inventory AI use and enforce strict access controls. (One new US executive order even requires “truth-seeking” AI procurement.) Internationally, the trend is to mandate explainable AI and audit logs.

Third, shadow AI and human factors are rising concerns. A recent summit of state/local leaders noted the perils of “shadow IT” (unauthorized AI tools in use) and the fear that staff might upload sensitive info to ChatGPT-like services. Leaders emphasize the need for clear policies: many local governments are now seeking guidelines, governance structures, and ethical frameworks for AI use in cybersecurity. Robust training is critical so that employees at all levels understand not only how to use AI tools, but also when not to: for instance, not feeding classified info into an external AI.

Finally, public-private partnerships are more important than ever. Many successful AI-cyber projects involve collaboration between agencies and tech firms or universities. By pooling expertise, governments can accelerate adoption of digital government solutions securely. For example, initiatives now exist for joint purchasing of managed detection and response (MDR) services that use AI, and for shared “cyber range” exercises that train staff with simulated AI-driven attacks. At the federal level, agencies are aligning with industry on government AI solutions: flagship programs (e.g. via GSA or DHS) are vetting AI cybersecurity tools for nationwide use. This means that AI implementation in government agencies will likely follow secure-by-design standards.

Despite these challenges, momentum is positive. In discussions of AI in local governments, leaders repeatedly note that public managers have risen to the occasion with creativity. They are combining scarce resources, training, and regional cooperation to deploy AI-enhanced monitoring, detection, and response. And at the federal level, AI is being integrated into national cyber strategies: new AI analytics teams support military and infrastructure defense, scanning for threats across intelligence data. In short, AI in the federal government is focused on strengthening national security, from protecting critical infrastructure to powering intelligence systems.

Future Trends: The Road Ahead

Looking beyond 2026, the trends are clear: AI will only deepen its role in cybersecurity. Organizations are moving toward proactive, automated defenses. For example, we are entering an era of “proactive threat hunting,” where AI agents continuously scan networks for vulnerabilities, patch code in real time, and even simulate attacks to test defenses. New concepts like real-time “Resilience Scores” will become common metrics.

Emerging technologies will compound this shift. Quantum computing, for instance, promises both new encryption (quantum-safe security) and new attack vectors; AI will help develop and defend against both. We expect advanced user analytics (multimodal biometrics, voice/gait recognition) to become mainstream in federal systems, replacing passwords and further reducing fraud. On the attacker side, AI might spawn adversarial tools that probe our models for weaknesses, which will require ongoing adversarial training and red-teaming exercises.

Regulation and standards are also evolving. Governments worldwide are tightening rules on AI use; agencies will need to demonstrate transparency and fairness in their AI-cyber systems. The era of unchecked AI adoption is ending: future government AI solutions must include built-in governance. Organizations like NIST, CISA, and international bodies will likely release updated guidance for AI in cybersecurity, focusing on accountability and human rights.

In summary, by 2026 and beyond, the AI cybersecurity landscape will be defined by this balance of innovation and oversight. Forward-looking agencies will integrate AI-driven defenses (from automated analysis to drone-like cyber patrolling) while investing in the skills and policies to use AI responsibly. For technology leaders in government, this means embracing AI tools and building human-AI partnerships.

Key Takeaways for Government Leaders:

- AI is now a double-edged sword: sophisticated attacks and defenses are both AI-driven. Prepare accordingly.

- Invest in AI-enhanced monitoring, analytics, and automated response to meet new speed/scale requirements.

- Address public-sector AI cybersecurity challenges head-on: train your team, secure your data, and follow best-practice frameworks.

- Leverage specialized solutions: modern cybersecurity solutions for government and digital government solutions now often include built-in AI, tailored to agency needs.

- Remember the human role in AI: even the best AI needs expert oversight. Use AI to filter alerts and reveal threats, then let skilled analysts validate and respond.

Conclusion

AI is reshaping threats and defenses simultaneously. The future trends, from AI-based proactive hunting to quantum-age encryption, will offer enormous power, but only if wielded wisely. Government tech leaders who prepare now will gain a real edge: “this spending isn’t just a cost; it’s the ultimate competitive advantage” in the digital era.

To stay ahead, start by exploring services that blend AI innovation with government standards. Our team offers government AI solutions, cybersecurity solutions for government, and other tailored digital government solutions. We can help integrate AI safely into your security posture. Ready to transform your cybersecurity strategy for 2026 and beyond? Contact us to explore our services and build a resilient, AI-empowered defense for your organization.

Frequently Asked Questions

What is AI cybersecurity and why is it important in 2026?

AI cybersecurity refers to using artificial intelligence to detect, prevent, and respond to cyber threats in real time. For government agencies in 2026, it’s critical because AI can stop advanced threats faster than traditional security tools.

How is AI changing cyber attacks on US government systems?

AI is enabling more advanced cyber attacks on US government networks through deepfake phishing, automated malware, and AI-driven reconnaissance. That’s why agencies now need AI-powered cybersecurity to defend at machine speed.

What are the biggest AI cybersecurity public sector challenges?

The biggest AI cybersecurity public sector challenges include legacy systems, limited budgets, workforce shortages, and data privacy requirements. App Maisters helps agencies overcome these challenges with secure, compliant AI implementation.

Does App Maisters provide AI cybersecurity services for public sector agencies?

Yes. App Maisters delivers AI-powered cybersecurity, digital government solutions, and government AI solutions designed specifically for federal, state, and local government agencies.

How does AI-powered cybersecurity improve threat detection?

AI-powered cybersecurity uses machine learning to detect unusual behavior, identify zero-day attacks, and automate responses in seconds. This dramatically reduces response time for government security teams.

Can AI cybersecurity tools be used by local and state governments?

Yes, AI in local governments is growing rapidly. App Maisters provides scalable AI cybersecurity solutions that work for city, county, and state agencies while meeting compliance standards.

Is AI cybersecurity safe for government data?

Yes, when implemented correctly. App Maisters follows NIST, CISA, and ISO-aligned security frameworks to ensure AI in the federal government and state agencies remains secure, transparent, and compliant.

How can government agencies start AI implementation securely?

AI implementation in government agencies should begin with risk assessments, data governance, and pilot deployments. App Maisters supports agencies end-to-end, from strategy to secure deployment.